Shaofeng Yin

About Me

I am currently a senior undergraduate at Tsinghua University, pursuing a degree in Information and Computing Science.

I have maintained a 4.0 GPA, ranking first in my major. I began my research under the supervision of Prof. Mingsheng Long, focusing on world modeling. I am currently visiting the Stanford Vision and Learning Lab, advised by Prof. Jiajun Wu and Prof. C. Karen Liu, working on humanoid control. I have also had the pleasure of collaborating with Yanjie Ze and Jialong Wu.

My current research interests lie in humanoid control, generalizable world modeling, and robotic manipulation. I aim to build robust primitive skills for humanoids through sim-to-real reinforcement learning or by leveraging human data, and then integrate these skills with planning to accomplish complex tasks.

I am applying for PhD positions starting in Fall 2026. Please drop me an email if you are interested in my research or just want to chat!

Invited Talks

- Visual Humanoid Loco-manipulation via Motion Tracking and Generation at Amazon Frontier AI & Robotics (FAR), Nov. 6, 2025. Slides

- Visual Humanoid Loco-manipulation via Motion Tracking and Generation at RoboPapers, Oct. 31, 2025. Slides

- World Models in Robotics: An Exploratory Perspective at Zhili Seminar, Mar. 16, 2024.

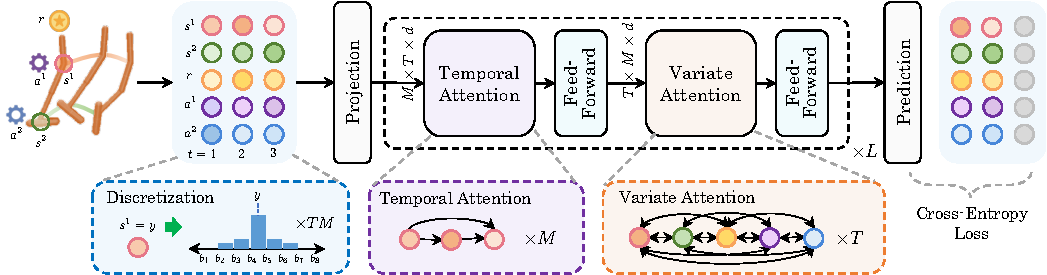

Research

- International Conference on Machine Learning (ICML), 2025.

- Conference on Neural Information Processing Systems (NeurIPS), 2024.

- Conference on Neural Information Processing Systems (NeurIPS), 2025.

Experience


Honors & Awards

- [2025] SenseTime Scholarship (Top 30 undergraduates in China)

- [2024] Grand Prize (Top Award), Student Academic Training Program, Tsinghua University (5/1852)

- [2024] Outstanding Poster Award, Student Academic Research Advancement Program, Tsinghua University (36/357)

- [2024] Comprehensive Excellence Award of Tsinghua University

- [2024] Spark Scientific and Technological Innovation Fellowship (top 1%, most prestigious and selective academic organization for students at Tsinghua University)

- [2023] First Prize, (National) Regional College Students’ Physics Contest

- [2023] Comprehensive Excellence Award of Tsinghua University

- [2023] Outstanding Academic Scholarship of Tsinghua University

Education

- B.S. in Information and Computing Science, Tsinghua University, 2022-2026 (expected)

Zhili College, Tsinghua University

GPA: 4.0/4.0, Major Rank: 1/30.

Selected Courses: Numerical Analysis (A+), Students Research Training (A+), Artificial Neural Networks (A+), Principles of Signal Processing (A+), Abstract Algebra (A+), Discrete Mathematics (A+), Mathematical Programming (A), Introduction to Machine Learning (A), Measures and Integrals (A), Complex Analysis (A)

Projects

-

A five-stage pipelined RISC-V 32-bit processor featuring interrupt and exception handling, user-mode virtual address translation via page tables, and performance enhancements through I-Cache, D-Cache (Writeback), and TLB, with additional support for peripherals such as VGA and Flash.

A five-stage pipelined RISC-V 32-bit processor featuring interrupt and exception handling, user-mode virtual address translation via page tables, and performance enhancements through I-Cache, D-Cache (Writeback), and TLB, with additional support for peripherals such as VGA and Flash. -

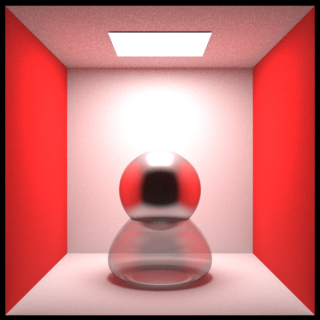

A compact renderer featuring Next Event Estimation, supporting Glossy Material (Disney Principal BRDF), Texture Mapping, Normal Mapping, Motion Blur, Normal Interpolation, Depth of Field, and Mesh Rendering (accelerated with BVH).

A compact renderer featuring Next Event Estimation, supporting Glossy Material (Disney Principal BRDF), Texture Mapping, Normal Mapping, Motion Blur, Normal Interpolation, Depth of Field, and Mesh Rendering (accelerated with BVH).

In submission
